Running Gemma 2 (2B) on a Raspberry Pi 4B with Ollama

October 14, 2025After attending GDG Cloud Thessaloniki DevFest 2025, I was inspired to experiment with Google’s new Gemma model. Surprisingly, running large language models (LLMs) like Gemma 2 (2B) on a Raspberry Pi 4B is entirely possible thanks to Ollama a lightweight framework designed for edge and local AI deployments. I’ll walk you through how to install Ollama, download Gemma 2, and run it directly on your Pi, along with some useful tips for setting up custom rulesets and behaviors to make the model truly your own.

This setup is perfect for experimentation, offline AI projects, and learning about LLMs on limited hardware.

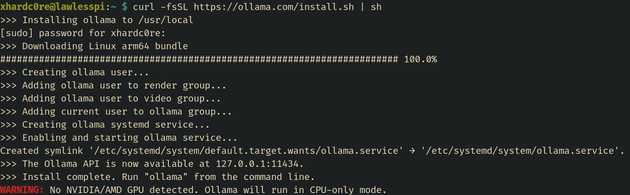

Install Ollama

Ollama allows you to run lightweight LLMs on devices like the Raspberry Pi. Install it using:

curl -fsSL https://ollama.com/install.sh | shThe installation may take a few minutes depending on your internet speed.

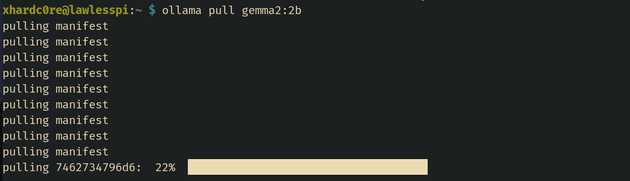

Download Gemma 2 (2B)

Once Ollama is installed, download the Gemma 2 model:

ollama pull gemma2:2b- The model is approximately 1.6GB.

- Ensure your Pi has enough storage space.

Run Gemma 2

Start the model in a terminal session:

ollama run gemma2:2b --verbose- You can interact with it directly via text.

- Gemma 2 can remember instructions or rules within the session. For example:

System prompt: Always follow these rules:

1. Answer politely

2. Provide short responses

3. Only respond in EnglishNote: On a Pi 4B, responses may take a few seconds due to CPU limitations.

Adding Custom Rules

To make Gemma 2 follow a persistent ruleset during a session:

- Use system prompts at session start.

- Prepend rules to each input prompt.

- Optionally, store rules in a file and load them automatically:

RULES=$(cat rules.txt)

ollama run gemma2:2b --prompt "$RULES\nUser: $USER_INPUT"Potential Projects

Even on limited hardware, you can use Gemma 2 for:

- Offline chatbot / personal assistant

- Story or creative writing

- IoT or home automation scripts

- Educational AI experiments

Performance Considerations

- RAM: 4GB limits long sessions and multitasking.

- CPU-only inference: No GPU acceleration; larger models will be slower.

- Response time: Expect a few seconds per prompt due to Pi CPU limits.